Understanding free and top memory statistics [Update]

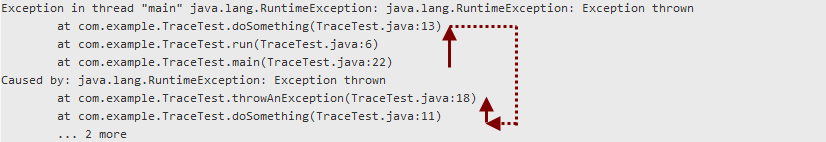

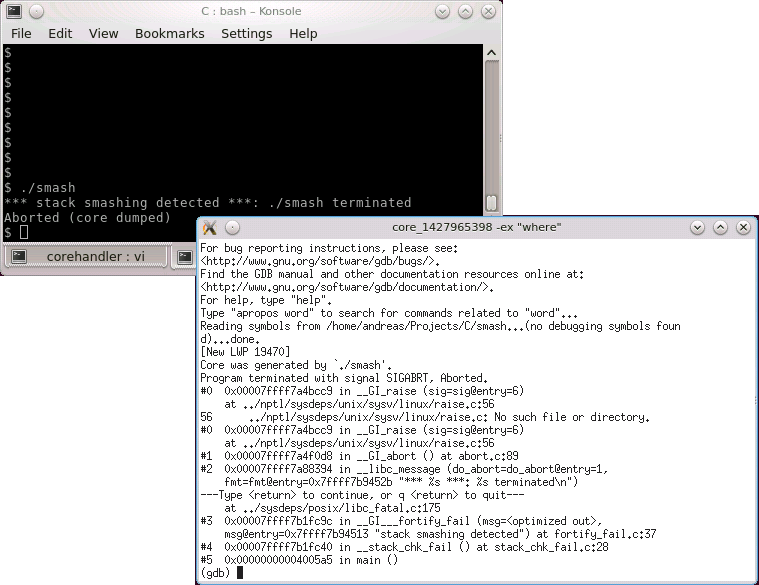

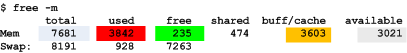

Both top and free can be used to gather basic information about memory usage, but each of them reports the statistics in a slightly different way which might not be directly obvious. An example output of free, using the -m switch to report numbers in MiB instead of KiB, is as follows:

Lets ignore the last line (Swap – it simply shows the total swap space and how much from that swap space is allocated and how much is still free) and focus on physical memory: The first three numbers in the Mem: line are straight forward: the “total” column shows the total physical memory available (most likely, this system has 8 GiB installed and uses a part of it for its graphics device, hence the “total” column shows less than 8 GiB). The “used” column shows the amount of memory which is currently in use, and the “free” column shows the amount which is still available. Then, there are the “buffers” and “cached” columns – they show how much from the “used” memory is really used for buffers and caches. Buffers and caches is memory which the kernel uses for temporary data – if an application requires more memory, and there is no memory “free” anymore, the kernel can still use this temporary memory and assign it to application processes (probably resulting in lower I/O performance since there is not as much cache memory available now). Finally, there is the “+/- buffers/cache” line: This might look strange first, but what it does is that it also reports the “used” and “free” memory, without the buffers and caches – as said above, buffer and cache memory is dynamic and can be assigned to an application process, if required. Hence, the “+/- buffers/cache” line actually shows the memory which is used by and available for processes. The following diagram shows the memory allocation from the sample above:

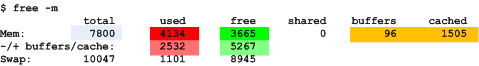

top returns almost the same information, in a slightly different layout (note that the numbers are somewhat different since some time has elapsed between the execution of the two commands):

The main difference is that it does not directly show the “used” and “free” memory without the buffers – but this can be easily calculated. Another thing which looks strange is that the amount of “cached” memory is shown in the “Swap” line – however, it has nothing to do with swap, probably it has been put there to use the available screen area as efficient as possible.

Update: procps >= 3.3.10

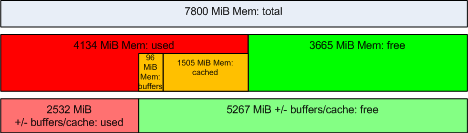

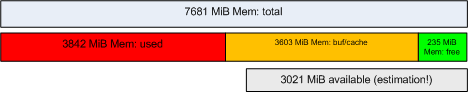

Starting with procps 3.3.10, the output of free has changed which might cause some confusion. I came across this through a question on StackOverflow: Linux “free -m”: Total, used and free memory values don’t add up. Essentially, free does not show the “+/- buffers/cache” line anymore, but instead shows an “available” column which is taken from the MemAvailable metric which has been introduced with kernel 3.14. See https://www.kernel.org/doc/Documentation/filesystems/proc.txt for a complete description:

MemAvailable: An estimate of how much memory is available for starting new applications, without swapping. Calculated from MemFree, SReclaimable, the size of the file LRU lists, and the low watermarks in each zone. The estimate takes into account that the system needs some page cache to function well, and that not all reclaimable slab will be reclaimable, due to items being in use. The impact of those factors will vary from system to system.The new format of the free output looks like this:

The main difference is that the “buff/cache” values are not part of “Used” anymore, but counted separately. Hence, the total memory is calculated as “used + buff/cache + free”:

Since the “available” value is an estimation which considers some system specific factors, it can not directly be calculated from the other values which are shown by free.